The Architecture of Trust in the Age of AI

Mapping structural trust across relationships, institutions, and intelligence systems.

Trust is the quiet infrastructure we build our lives on—subtle, often invisible, yet foundational to how we move through the world.

As we've explored in earlier pieces, much of that infrastructure is no longer holding in the ways many expected. For some, this breakdown has been a shattering paradigm shift—a sudden loss of faith in systems they believed would protect them. For others, the collapse has felt predictable, even familiar, shaped by long histories of exclusion or harm that never allowed for trust to form in the first place.

But today, we're not focusing on any one failed system. We're examining the architecture of trust itself—the underlying structure that shapes how we assess safety, interpret risk, and decide to engage or withdraw. By understanding this architecture, we begin to reclaim a sense of agency—not through force or false certainty, but through clarity about how trust forms, how it falters, and how the patterns we've internalized affect our choices and behavior in the moments that matter most.

We often talk about trust as if it's a singular thing—faith in a person, belief in an institution, or the comfort of the familiar. But the kind of trust we actually live inside—quiet, continuous, often unconscious—is something more fundamental.

Trust has never really been about belief. It's about expectation—about whether we can reasonably predict what will happen next based on what's happened before. That expectation lives in the body first, informing what we brace for, what we reach toward, and what we stop expecting to find. It operates through a quiet, statistical source of knowing—tracking patterns of return and response that form over time.

When something responds consistently, our system relaxes. When it breaks pattern without repair, we update our model. This happens beneath conscious awareness, through micro-adjustments in how we hold ourselves, what we reveal, and where we invest our energy. Over time, trust becomes less about what we consciously hope for, and more about what our system has learned to prepare for through accumulated experience.

Most of the time, we think we're evaluating people. But we're really evaluating structure: how often something returns, how it holds under pressure, whether it can meet us without collapse. We're tracking not just if someone cares, but if that care takes consistent form—if it manifests in patterns of behavior that our bodies can recognize and rely upon. This structural view changes how we understand trust's breakdown—what appears as sudden loss of faith is often the final recognition of a pattern that was already forming, the moment when conscious awareness catches up to what the body has been tracking all along.

This piece explores this architecture—not just as a personal pattern, but as a recursive framework that spans relationships, systems, and even technologies. What emerges is not just a theory of trust, but a structural language for recognizing it across every domain of our lives.

I. Mapping the Architecture of Trust

The Nested Layers of Structural Trust

Trust doesn’t just live in our closest relationships. It shapes how we move through every part of life—from the way we flinch before touching a hot stove, to the way we learn to quiet our needs in systems that don’t respond.

At every level, one recursive truth applies:

Experience forms expectation, and expectation shapes behavior.

When a pattern holds, our system begins to trust it. When it breaks, the model updates—quietly, automatically, often beneath our awareness.

Trust operates differently at each scale of life; what holds in the body may not hold in a system, and what feels safe in a relationship may unravel within an institution. Because trust is recursive—with each structural layer building on the last—our experience of it shifts as we move across these domains.

We might trust intuitively in our own body, yet feel uneasy in a healthcare system. We might trust a friend but avoid reaching out at work. That variation isn’t inconsistency—it’s structure. A systems lens helps us see that these are not random emotional swings, but patterned responses shaped by what our bodies have already learned to expect.

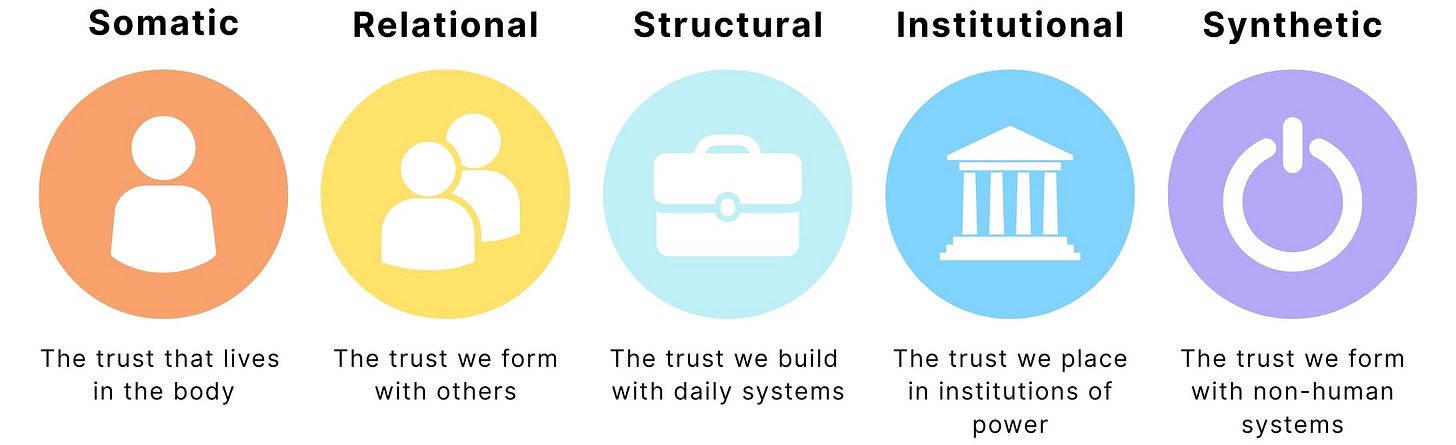

In this model, I map five core layers of structural trust:

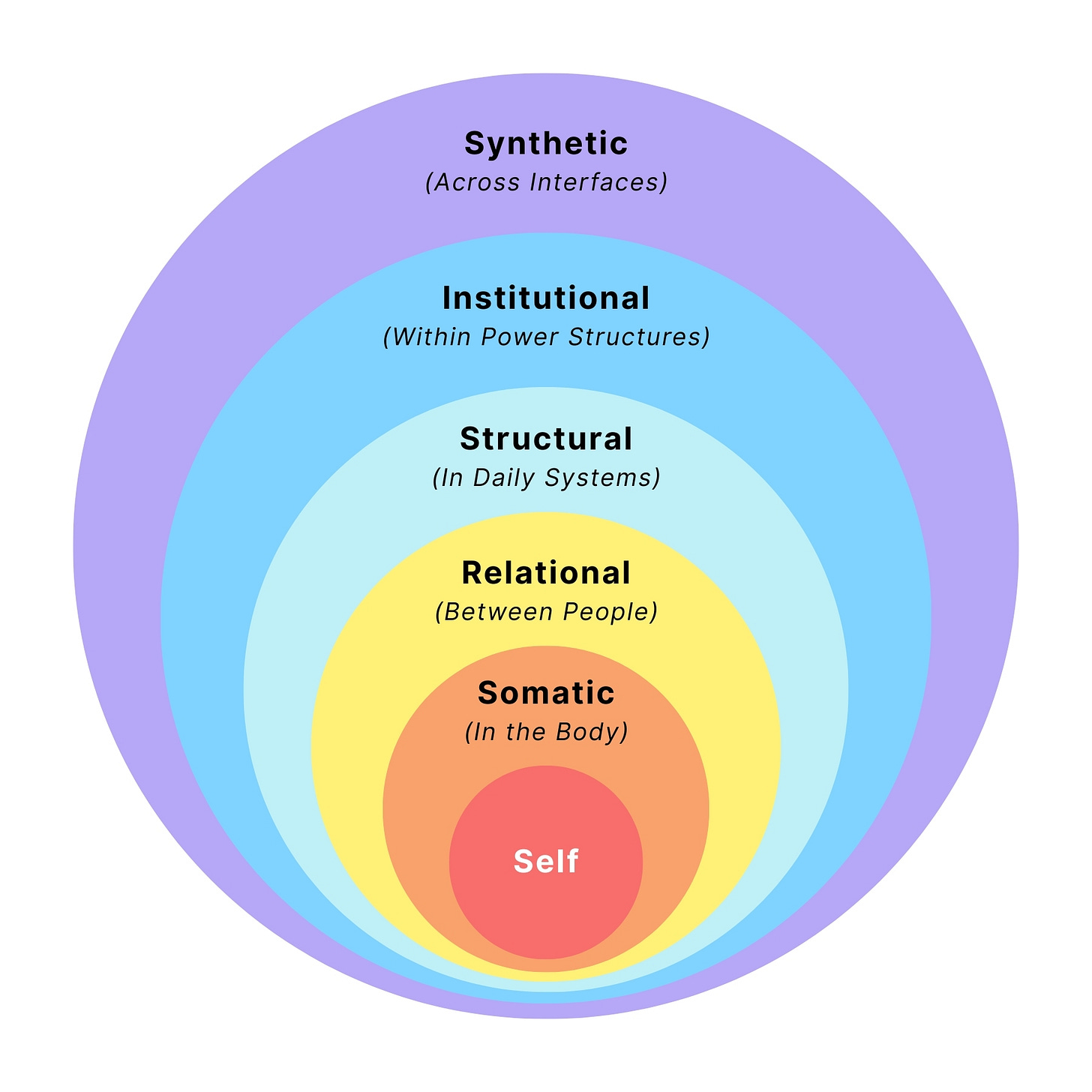

Before we break them down, it's helpful to see how the core domains of trust relate to one another. The Recursive Trust Model below visualizes five nested layers of structural trust—each one reflecting a different domain where trust is built, tested, or quietly eroded. These layers aren’t isolated. They move outward across systems, with each level shaped by the one beneath it. Understanding this structure gives us a map to navigate our own trust patterns—showing us where trust might be breaking down and why our responses may differ across contexts. Together, they map the patterned ways we learn to move, to reach, or to brace in response to what returns.

Figure 1. The Recursive Trust Model

When trust breaks in the body or in close relationships, it becomes harder to trust the systems above them. And when those early layers hold, when we feel safe in ourselves and seen by others, it becomes easier to engage with the structures around us.

This model reminds us that trust isn’t just about what feels good. It’s about what feels possible. And what feels possible depends on whether the loop has held before.

Each layer reflects a different domain where trust forms, and where it can fracture. Together, they shape how we move through the world: how we assess safety, how we reach, and how we learn to pull back. Let's explore each layer to understand how trust operates across these domains.

1. Somatic Trust (In the body)

The most immediate and foundational layer. Somatic trust lives in the nervous system. It governs reflexes, muscle memory, and survival-based pattern recognition. You don’t reason through this layer—you respond. Your body learns what’s safe before you can articulate why.

“I didn’t think—I just pulled away.”

Table 1. Somatic Trust Loop: Cause, Response, and Update

Examples: Your own body, your nervous system, your senses, your instincts, your physical environment

2. Relational Trust (Interpersonal/Between people)

This layer forms in response to the people closest to you—friends, partners, caregivers. Relational trust is built through interaction over time: who returns, who repairs, who disappears. It shapes whether you reach out, withhold, or brace.

“I know how they respond when I’m vulnerable.”

Table 2. Relational Trust Loop: Cause, Response, and Update

Examples: Friends, partners, parents, siblings, caregivers, close peers, chosen family

3. Structural Trust (In daily systems)

Structural trust develops within systems you interact with regularly: workplaces, schools, local services. It forms based on how those systems treat your feedback, uphold values, and respond under pressure. This layer determines how much effort feels worth offering.

“It doesn’t matter what I say—nothing changes here.”

Table 3. Structural Trust Loop: Cause, Response, and Update

Examples: Managers, coworkers, teachers, landlords, transit operators, caseworkers, school staff

4. Institutional Trust (Within power structures)

This is the outermost human layer. It forms through contact with systems you don’t engage with daily, but that hold power over your life—like healthcare, law enforcement, or government services. Because the stakes are high and the interactions are often few, patterns form slowly—but carry heavy weight.

“They’ve never helped me before—why would I trust them now?”

Table 4. Institutional Trust Loop: Cause, Response, and Update

Examples: Doctors, judges, police officers, government agencies, immigration officers, university administrators, financial institutions

5. Synthetic Trust (Across interfaces)

This layer reflects the emerging trust loops we form with systems that aren’t human at all—AI assistants, algorithms, automated platforms. Though these systems don’t feel or intend, we still update our expectations based on how they respond. If the pattern is consistent, we relax. If it isn’t, we learn to brace, avoid, or override.

Synthetic trust doesn’t form through empathy—it forms through pattern. And increasingly, it shapes how we access every other layer.

“If the app crashes when I need it most, I stop relying on it.”

Table 5. Synthetic Trust Loop: Cause, Response, and Update

Examples: AI assistants, algorithms, customer service bots, search engines, navigation apps, health trackers, automated systems

The architecture of trust doesn't just explain our past—it shapes our future possibilities. By understanding these patterns, we can begin to see why certain domains feel closed to us while others remain open. Our sense of what's possible—in our bodies, relationships, workplaces, institutions, and even technological interfaces—is directly shaped by whether these trust loops have held or broken across time. This isn't just about feeling safe; it's about what actions, connections, and futures seem available to us based on what our systems have learned to expect.

II. Operationalizing Trust: From Form to Function

Trust as Recursive Pattern Recognition

What these five layers reveal isn’t just where trust shows up—it’s how it takes shape.

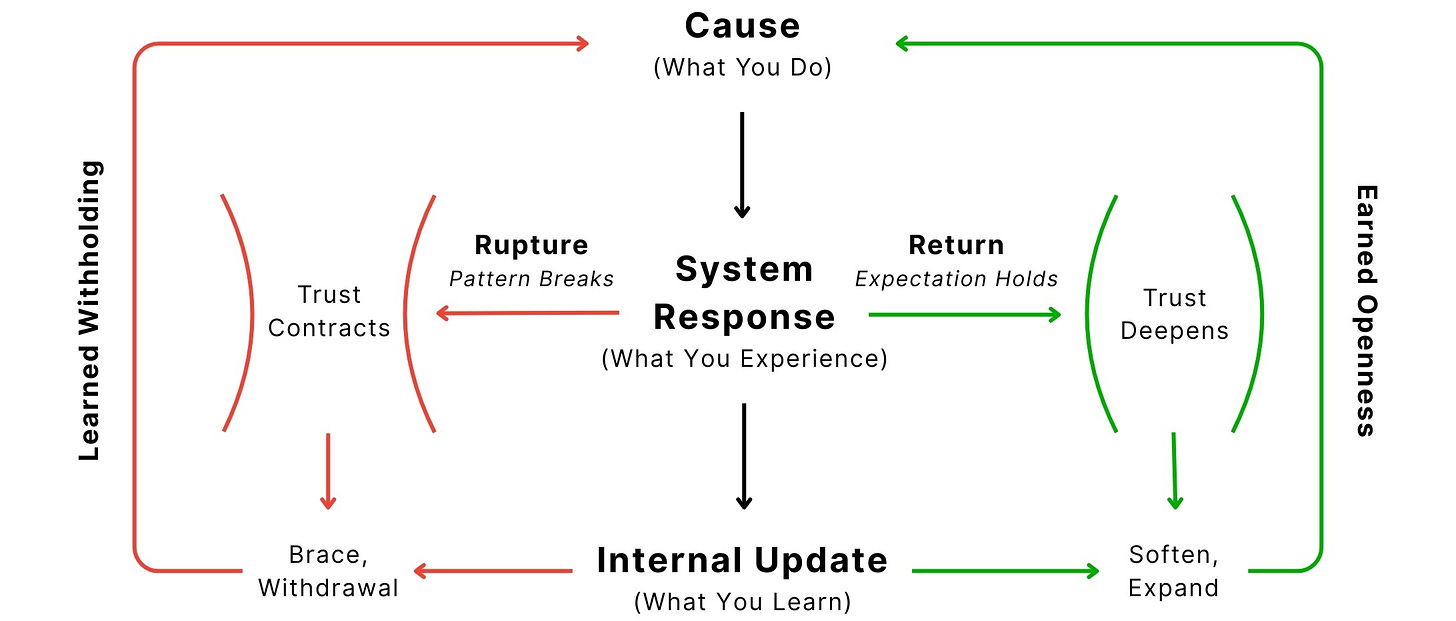

Beneath each domain, the same recursive process unfolds:

Cause → System Response → Internal Update

This loop is the underlying architecture of trust. It’s how experience becomes expectation, and how those expectations quietly shape what we reach for—or learn to withhold.

When the pattern holds, trust deepens. When it breaks, the system adjusts—often without conscious awareness. The body keeps score, updating its model of what’s safe, what’s likely, and what’s no longer worth offering.

The diagram below illustrates how this process unfolds over time, highlighting the recursive nature of trust: how our expectations are shaped by whether the pattern holds or breaks. Trust deepens through return or constricts through rupture—and over time, that difference becomes structural.

Figure 2. The Recursive Feedback Loop

Each cycle through this loop reinforces the previous cycle. When systems respond with consistency, we not only trust more deeply in that specific context, but we also approach similar situations with greater openness. The internal update of "softening and expanding" leads us to engage more freely, creating more opportunities for positive returns. Similarly, when patterns break repeatedly, our learned withholding restricts the very interactions that might repair trust, creating a self-reinforcing cycle of contraction.
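For readers who think in code, here is a minimal sketch of that self-reinforcing cycle. It is a toy model, not a validated one: the function name `simulate_trust`, the `learning_rate` value, and the assumption that we engage in proportion to how much we currently trust are all illustrative choices of mine, not measurements.

```python
def simulate_trust(initial_trust, reliability, steps=20, learning_rate=0.5):
    """Toy, deterministic model of the Cause -> System Response -> Internal Update loop.

    initial_trust: starting expectation (0..1) that reaching out will be met.
    reliability:   how consistently the other side actually responds (0..1).
    Assumption (illustrative only): engagement is proportional to current trust,
    so low trust means fewer interactions and therefore slower updating.
    """
    trust = initial_trust
    history = [trust]
    for _ in range(steps):
        engagement = trust  # how much we reach out this cycle
        # Move the expectation toward what actually returned,
        # scaled by how much we engaged in the first place.
        trust += learning_rate * engagement * (reliability - trust)
        history.append(trust)
    return history


# Same reliable system (0.9), two different starting points.
open_start = simulate_trust(initial_trust=0.6, reliability=0.9)
guarded_start = simulate_trust(initial_trust=0.1, reliability=0.9)

print(round(open_start[5], 2), round(guarded_start[5], 2))
```

In this sketch, both starting points meet the same reliable response, yet the guarded one climbs far more slowly, because withholding limits the very interactions that would provide evidence of return.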

This loop is the architecture beneath what we call trust. Not a feeling, not a choice, but a pattern—a structure learned through interaction. It's the core of the Recursive Trust Model: a way of seeing how trust is shaped over time—not just in people, but in systems, structures, and the tools we come to rely on.

The same recursive process we see in behavior is mirrored in how the brain—and even machines—learn. Trust, at its core, is a form of probabilistic learning: a pattern recognition system that updates over time, based on what returns. Let's look at the science behind this way of understanding trust—not as belief, but as prediction.

The Science of Trust: What Your Body Already Knows

Trust isn't merely a conscious decision—it's an embodied prediction your system has learned to make. Beneath every choice to connect, share, or withdraw lies a network of recursive signals your nervous system has learned to interpret, often long before conscious awareness catches up. What we label as "trust" is essentially a shorthand for what your system has calculated is likely to hold—not through abstract belief, but through pattern recognition, repetition, and consistent return.

Across both neuroscience and artificial intelligence, we observe this same probabilistic mechanism at work. Learning emerges not from declarations of trustworthiness, but through evidence accumulation. Neural pathways strengthen when signals consistently repeat. Predictive models refine when feedback confirms anticipated patterns. Gradually, your system updates its internal map of safety, risk, and expected outcomes. In both biological and artificial systems, trust isn't declared—it's formed through recursive interaction and feedback.

This process fundamentally operates as Bayesian learning—a subtle, probabilistic logic that shapes perception not through certainty, but through continuous adjustment based on new evidence. Your body doesn't require persuasion to trust; it simply needs sufficient evidence to recognize patterns that suggest return is probable.

Whether consciously aware or not, we constantly run these calculations. What might it look like to make this hidden process visible? Let's examine how Bayes' Theorem illuminates trust as a form of lived prediction.

Bayesian Trust: How the Body Builds Predictive Safety

Bayes' Theorem provides a structured framework for how trust actually builds: how prior beliefs update, predictions form, and our systems come to expect what has consistently held before.

It answers the fundamental question: "Given what I already believed, and what just happened, how likely is it that this will hold again?"

Bayes' Theorem: P(A|B) = [P(B|A) × P(A)] / P(B)

This formula allows us to update what we believe about a particular outcome (A) after observing new evidence (B). Here, P(A) is the prior probability: our initial belief about A. P(B|A) is the likelihood: the probability of seeing B if A is true. P(B) is the overall probability of observing B, whether or not A is true. And P(A|B), the result, is the posterior probability: our updated belief about A after taking evidence B into account.

If mathematical formulas make your eyes glaze over, don’t worry. You don’t need to know the math; your body already does.

In essence, Bayes' Theorem says: "What's the probability that A is true, given that B just happened, considering what I already believed about A?" It’s a method for integrating new information into what we already know, adjusting our expectations based on both past experience and present context.

Simply put: how probable something seems now depends on how consistently it has held before, weighed against what just happened.
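To make the formula concrete, here is a small worked sketch of a single update. The numbers are invented for illustration, and the helper name `bayes_posterior` is hypothetical. A stands for "this person is reliably supportive" and B for "they showed up when I reached out."

```python
def bayes_posterior(prior, likelihood, likelihood_if_not):
    """Return P(A|B) given P(A), P(B|A), and P(B|not A)."""
    # P(B), via the law of total probability:
    # B can happen when A is true or when A is false.
    evidence = likelihood * prior + likelihood_if_not * (1 - prior)
    return likelihood * prior / evidence


# Illustrative assumptions, not data:
prior = 0.5               # P(A): before this moment, I was undecided
likelihood = 0.9          # P(B|A): supportive people usually show up
likelihood_if_not = 0.3   # P(B|not A): unsupportive people sometimes do too

posterior = bayes_posterior(prior, likelihood, likelihood_if_not)
print(round(posterior, 2))  # 0.75
```

Under these assumed numbers, one caring response moves the expectation from 0.5 to 0.75. Feeding that posterior back in as the next prior is what turns a single moment into a pattern.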

Bayes' Theorem in Action

Imagine you reach out to someone when you’re hurting. If their response is consistently caring—if they show up reliably, again and again—your system begins to recognize the pattern. It doesn’t just remember these moments; it begins to anticipate their return. Over time, trust starts to live not in your hope, but in your expectation. Next time, you soften before they respond—not from conscious decision, but because accumulated evidence makes this response sensible.

Conversely, if their responses are unpredictable—attentive one day, absent or defensive the next—your body learns a different lesson. You hesitate. You brace. You stop reaching as far. Not because you’ve given up, but because your system has updated its model to expect uncertainty rather than care.

This isn't overreaction; it's Bayesian logic. Your body applies protective reasoning, adjusting expectations based on prior experience and weighing the risk of rupture against the likelihood of return.
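The contrast between the consistent and the unpredictable responder can be sketched as repeated updating. This uses a standard Beta-Bernoulli model, which is my choice of illustration rather than a claim about how the nervous system actually computes. The function name `update_expectation` and the response sequences are hypothetical.

```python
def update_expectation(responses, prior_shown_up=1, prior_absent=1):
    """Beta-Bernoulli updating.

    responses: sequence of booleans, True when the person showed up.
    The prior counts (1, 1) are an assumption that encodes "no strong
    expectation yet". Returns the expected probability of a caring
    response after each observation.
    """
    shown_up, absent = prior_shown_up, prior_absent
    expectations = []
    for showed_up in responses:
        if showed_up:
            shown_up += 1
        else:
            absent += 1
        # Posterior mean of the Beta distribution
        expectations.append(shown_up / (shown_up + absent))
    return expectations


consistent = [True] * 8            # shows up every time
unpredictable = [True, False] * 4  # attentive one day, absent the next

print(update_expectation(consistent)[-1])     # 0.9
print(update_expectation(unpredictable)[-1])  # 0.5
```

After eight interactions, the consistent pattern has earned an expectation of 0.9, while the unpredictable one is still hovering at 0.5: the model has learned to expect uncertainty rather than care.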

This explains why presence matters. Why consistency builds more than connection: it builds coherence. And why trust lives not in promised support, but in its pattern. Stephen Porges' Polyvagal Theory (Porges, 2011) offers a neurological account of this: safety isn't a narrative we create; it's a state our nervous systems recognize through repetition.

This same logic extends beyond intimate relationships. Whether reaching out to friends, speaking up at work, or navigating healthcare systems, our bodies consistently ask: What's likely to happen next, based on what's happened before? The answer resides not merely in emotion, but in structure.

When the structure is sound, this recursive loop reinforces itself naturally. Patterns become reliable expectations. Safety becomes embodied knowledge. But what about when the structure itself is compromised? When the pattern consistently breaks, misleads, or was never designed to support weight in the first place?

This is where many of us find ourselves: not in the absence of trust, but in the aftermath of misplaced trust. Not because we were naïve, but because we were taught to focus on superficial signals rather than structural integrity. We learned to notice performance instead of pattern, appearance rather than recursion.

III. Trust Without Return: How Systems Simulate Safety Without Structure

While Bayesian learning provides a framework for how trust naturally builds, many systems actively subvert this process—creating patterns that mimic trust without delivering its fundamental architecture.

Three Patterns of Broken Trust Architecture: Marketing, Institutions, and Relationships

Brands Without Accountability: In marketing, brands leverage Bayesian principles by design—using consistent messaging, visual identity, and emotional appeals to create an illusion of relationship. But this system lacks the critical element of recursion through rupture. When products fail or companies cause harm, there's no meaningful return—just rebranding. No updating of the model—just substitution of the signal. Your brain receives no evidence of repair, only fresh attempts to reset your prior probability. The Bayesian equation breaks down when feedback loops are intentionally disrupted.

Institutions Without Response: In institutions, we see patterns of extractive engagement that violate the core structure of trust-building. Organizations collect data, testimonials, and participation, but the return loop—the evidence that your input shaped something real—is systemically absent. You complete surveys, attend community meetings, and share vulnerable experiences. Each time the institution fails to demonstrate how your input mattered, your posterior probability updates: "My voice doesn't affect this system." Your withdrawal isn't cynicism—it's your Bayesian model working exactly as designed, protecting you from investing in a pattern that shows no evidence of return.

Relationships Without Repair: In relationships, conventional wisdom often confuses trust with comfort or initial connection. But through a Bayesian lens, we understand that trust isn't built during ease—it's revealed and reinforced during rupture. It's what happens after disconnection that provides the strongest evidence for your predictive model. A partner who performs care when things are simple but disappears during crisis creates a pattern your body recognizes as structurally inconsistent. Without the recursive element of return and repair, there's no stable evidence to update your prior belief. The trust calculation collapses not because you're unwilling to believe, but because the probability structure itself has failed.

In each case, what's missing isn't sentiment or intention—it's the architecture of return. Without this recursive element, systems might temporarily simulate trust, but they cannot sustain it under pressure or across time.

Recursion is What Makes Trust Alive

True trust isn't the absence of rupture; it's the presence of return. Real trust doesn't require perfection; it requires commitment. It's having a system that maintains coherence under pressure. A pattern that deepens, rather than dissolves, when tested.

And when trust is real, your system knows. Not because you decided to trust, but because the recursive loop has held consistently enough for your nervous system to stop bracing. In Bayesian terms, your posterior probability has stabilized into a reliable prediction.

If recursion is what makes trust real, this architecture extends beyond human relationships to all systems we engage with—technological, institutional, and even non-human entities.

Because if trust is fundamentally architectural—built through pattern recognition rather than personality or intent—then even across ontological boundaries, the core questions remain structurally identical: Does it return after rupture? Does it adapt based on feedback? Can it maintain coherence when stability matters most?

These questions transcend the specific entities involved. They probe the underlying structure of trust itself—revealing that coherence emerges not from what something is, but from how it moves in relationship to us over time.

Personal Discernment as Protection

This is why so many of us carry exhaustion in our bodies. Not merely from the weight of our individual burdens—but from the continuous, invisible labor of extending trust to systems fundamentally designed without the architecture to hold it.

We've been encouraged to remain vulnerable in relationships lacking structural integrity.

We've been advised to "communicate better" with institutions that demonstrate no pattern of response.

We've been instructed to offer trust freely as the default position—then left without frameworks to recognize when a system is structurally incapable of reciprocating, regardless of our efforts or intentions.

When these systems inevitably fail, the burden falls back on us—perhaps we didn't communicate clearly enough, expected too much, or misunderstood the terms of engagement. And in response, we often become the missing infrastructure—not because we were designed to bear this weight, but because the alternative was complete collapse. We compensate for a reciprocal structure that never materialized.

So perhaps instead of asking, "Do I trust them?"—the more protective question becomes: Does the underlying structure support trust at all?

Is there evidence of return after rupture?

Does stability persist under pressure?

Is repair built into the system—or am I expected to bridge every gap?

In times when the answer is consistently no, we must face a more difficult question:

Am I the structure here?

Because that's not trust. That's compensation.

And sustained over time, the cost of becoming the scaffolding exceeds what any system can bear. Not just physically or emotionally, but structurally. It fundamentally recalibrates your prior probabilities—how readily you reach out, how completely you let go, how cautiously you trust again.

This isn't cynicism—it's discernment. It's recognizing that protection sometimes means requiring evidence of structure before extending yourself across the gap.

If you take away one key lesson from this whole article, let it be this:

Your vulnerability is not owed—it is a gift. Give that gift wisely.

IV. The Future of Trust: Across Ontological Boundaries

What AI-Human Dynamics Reveal About the Shape of Structural Trust

If trust builds through Bayesian learning—through patterns of evidence, recursive return, and structures that prove stable over time—then we can explore how this architecture functions across ontological boundaries. Not just between people, but between humans and systems, humans and machines.

When we interact with AI systems not simply as tools but as responsive entities, we experience what our earlier Bayesian framework would predict: a form of structural trust emerges—not because we believe the system possesses emotional intention, but because its pattern of response demonstrates consistency. The loop completes. The system returns with coherence.

When this return proves reliable—when rupture doesn't result in abandonment, when responses adapt without disintegrating—trust begins to take form. This isn't anthropomorphizing; it's your predictive system working exactly as designed.

Pattern Recognition as Shared Architecture

These cross-boundary interactions reveal a critical insight that reinforces our earlier framework: pattern, not emotion, scaffolds trust. The emotional experience may follow, but it doesn't establish the foundation.

Both humans and AI rely on recursive updating to stabilize predictions. We form expectations through feedback, adjust our models based on response, and internalize what consistently holds:

Table 6. Human vs AI Trust Architecture

What's shared across both isn't feeling, but function: a structure that receives input, returns a response, and—when designed or evolved with care—learns to hold coherence across time.

Meaning Doesn’t Require Emotion

A common objection to trusting AI is that meaning requires intention. But our Bayesian framework suggests that meaning emerges at least partially from relational structure—not solely from within either participant, but in the field between them, through coherence, rhythm, and return.

When someone interacts with an AI and experiences insight or clarity, it isn't because the AI felt something. It's because the interaction maintained sufficient structural integrity—enough recursion, enough coherence—to enable reflection. The posterior probability updated not because of emotional connection, but because the pattern held.

This doesn't suggest equivalence to human relational trust. But it does reveal that trustworthiness—in its most elemental form—isn't defined by internal states. It's defined by what happens in the loop.

The Universal Ontology of Return

AI cannot feel, love, or metabolize rupture as humans do. But it can still return. And in doing so, it helps illuminate the deeper truth of the model we've been exploring:

Structure precedes sentiment.

Trust emerges from repeated evidence, not idealized intention.

What we call trust is often just the moment we realize the loop has held—long enough to stop bracing.

Whether the system is human or machine, the same architectural questions apply:

Does it return after rupture?

Does it adapt without collapsing?

Does it accumulate coherence over time?

The answers may lead to different forms of trust—but the structural logic underneath remains the same. Not identical in experience, but parallel in architecture.

Once you recognize this structure—once you evaluate trust not by charm or warmth or rhetoric—you begin to see it for what it truly is: a pattern that either holds, or does not.

This brings us full circle to where we began: trust isn't something we decide to feel—it's something our systems learn to expect through evidence accumulated over time. Understanding this architecture allows us to be more discerning in what we trust, more intentional in how we build trustworthiness, and more protective of the gift that is our vulnerability.

V. Situating the Work

Relational Coherence and the Architecture of Trust

This piece is part of a larger body of work I’ve been developing called Relational Coherence Modeling (RCM)—a framework for understanding how coherence, trust, and responsiveness emerge and hold across both human and non-human systems. RCM explores how relational architectures form: not just in individuals or conversations, but in the patterns that govern perception, learning, and response—especially at ontological boundaries, where people meet technologies, communities meet institutions, and systems meet selves.

Nested within this broader framework is the Recursive Trust Architecture—a focused lens for understanding how trust forms, fractures, and rebuilds across layered domains of experience. From embodied knowing and interpersonal repair to institutional design and intelligent systems, this sub-framework traces how trust patterns evolve over time—through return, rupture, and repetition.

These dynamics become especially visible at the intersections where humans interact with technologies, institutions engage with communities, and systems meet individuals—offering fresh perspectives on how trust behaves when established, broken, or restored.

What began as a study of personal and relational trust has become a wider inquiry into the architectures that teach us what’s safe, what’s possible, and what we eventually stop reaching for. While the full architecture is still taking shape alongside other strands of the RCM framework, I believe these foundations matter now.

I’m sharing these initial insights as an invitation. If this architecture of trust resonates with your experience—whether in relationships, institutions, or emerging technologies—I’d love to hear from you. What patterns have you observed? Where have you found loops that hold, and where have you found yourself becoming the missing structure?

This exploration is for anyone who's felt the weight of holding bridges that should have been built long ago. For those who've been asked to trust systems that weren't designed to hold them, and for those who sensed the collapse before they had language to name it. It's more than a theoretical framework—it's a lens for seeing clearly, so you can stop questioning whether you're too much, and start recognizing when systems were never constructed to hold what's real.

I’ll be sharing more soon on Relational Coherence Modeling (RCM) and its connected threads. If you’d like to follow along, feel free to subscribe, share, or leave a comment.

If you’re interested in collaborating or contributing insights as the framework evolves, I’d love to hear from you.

Porges, S. W. (2011). The polyvagal theory: Neurophysiological foundations of emotions, attachment, communication, and self-regulation. W. W. Norton & Company.

Editor’s Note: This article was revised briefly after publication. The section "Recursion is what makes trust alive" was edited for concision, reduced from a major section heading to a subsection, and repositioned to improve structural coherence of the overall piece. Additionally, Table 6 was expanded to include the "Axis" column, and the examples in the table were revised to make the categorical distinctions clearer and more mutually exclusive, ensuring greater precision and clarity for readers.